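How do I find Tmall's cookie information?

I've been struggling lately: my program keeps hitting a page redirect when it runs, and I can't figure out how to fix it.

Someone suggested sending a cookie so that the request is treated as logged in, but how do I actually find Tmall's cookie? I have searched for a long time without any result. Could someone explain in detail how to look up the Tmall cookie? This is driving me crazy.

Spider source code attached: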

    # -*- coding: utf-8 -*-
    import scrapy

    from topgoods.items import TopgoodsItem


    class TmGoodsSpider(scrapy.Spider):
        name = "tm_goods"
        # allowed_domains takes bare domain names, not URLs
        allowed_domains = ["tmall.com"]
        start_urls = (
            'https://list.tmall.com/search_product.htm?q=%C5%AE%D7%B0&type=p&spm=a220m.1000858.a2227oh.d100&from=.list.pc_1_searchbutton',
        )

        # Number of list pages processed
        count = 0

        def parse(self, response):
            TmGoodsSpider.count += 1
            divs = response.xpath("//div[@id='J_ItemList']/div[@class='product']/div")
            if not divs:
                self.log("List Page error--%s" % response.url)
                return

            for div in divs:
                item = TopgoodsItem()
                # Product price
                item["GOODS_PRICE"] = div.xpath("p[@class='productPrice']/em/@title")[0].extract()
                # Product name
                item["GOODS_NAME"] = div.xpath("p[@class='productTitle']/a/@title")[0].extract()
                # Product link (add a scheme only when the href is protocol-relative)
                pre_goods_url = div.xpath("p[@class='productTitle']/a/@href")[0].extract()
                item["GOODS_URL"] = pre_goods_url if pre_goods_url.startswith("http") else ("http:" + pre_goods_url)
                yield scrapy.Request(url=item["GOODS_URL"], meta={'item': item},
                                     callback=self.parse_detail, dont_filter=True)

        def parse_detail(self, response):
            div = response.xpath('//div[@class="extend"]/ul')
            if not div:
                self.log("Detail Page error--%s" % response.url)
                # Nothing to extract; stop here instead of failing on div[0]
                return

            item = response.meta['item']
            div = div[0]
            # Shop name
            item["SHOP_NAME"] = div.xpath("li[1]/div/a/text()")[0].extract()
            # Shop link
            item["SHOP_URL"] = div.xpath("li[1]/div/a/@href")[0].extract()
            # Company name
            item["COMPANY_NAME"] = div.xpath("li[3]/div/text()")[0].extract().strip()
            # Company address
            item["COMPANY_ADDRESS"] = div.xpath("li[4]/div/text()")[0].extract().strip()
            yield item

Output:

    10-15 19:20:06 [scrapy] DEBUG: Redirecting (302) to <GET https://login.taob
    m/jump?target=https%3A%2F%2Flist.tmall.com%2Fsearch_product.htm%3Ftbpm%3D1%
    D%25C5%25AE%25D7%25B0%26type%3Dp%26spm%3Da220m.1000858.a2227oh.d100%26from%
    st.pc_1_searchbutton> from <GET https://list.tmall.com/search_product.htm?q
    AE%D7%B0&type=p&spm=a220m.1000858.a2227oh.d100&from=.list.pc_1_searchbutton
    10-15 19:20:06 [scrapy] DEBUG: Redirecting (302) to <GET https://pass.tmall
    add?_tb_token_=KL9DqtpQ4JXA&cookie2=fc1318de70224bfb4688cb59f2166e17&t=4d43
    c2cda976f8ace84a7f74a08&target=https%3A%2F%2Flist.tmall.com%2Fsearch_produc
    %3Ftbpm%3D1%26q%3D%25C5%25AE%25D7%25B0%26type%3Dp%26spm%3Da220m.1000858.a22
    d100%26from%3D.list.pc_1_searchbutton&pacc=RRsp0ixWwD7auxG1xr9HDg==&opi=59.
    .222&tmsc=1444908006341549> from <GET https://login.taobao.com/jump?target=
    %3A%2F%2Flist.tmall.com%2Fsearch_product.htm%3Ftbpm%3D1%26q%3D%25C5%25AE%25
    B0%26type%3Dp%26spm%3Da220m.1000858.a2227oh.d100%26from%3D.list.pc_1_search
    n>

Please explain step by step how to find the Tmall cookie. I have no formal programming background and am a complete beginner.


Answers

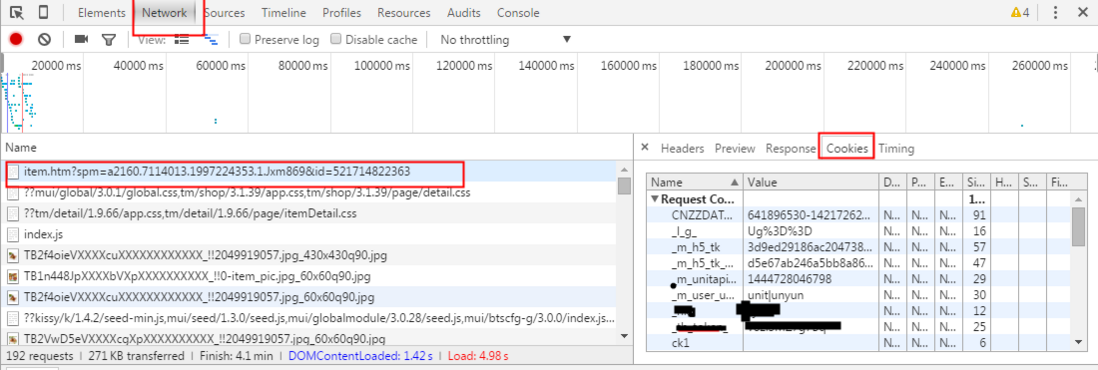

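1. Log in to your Tmall account in the browser.

2. Once you are logged in, use Fiddler to capture the HTTP requests your browser makes as you browse the site.

3. In the request headers, find the `Cookie` header. The value that follows it is the data you need.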

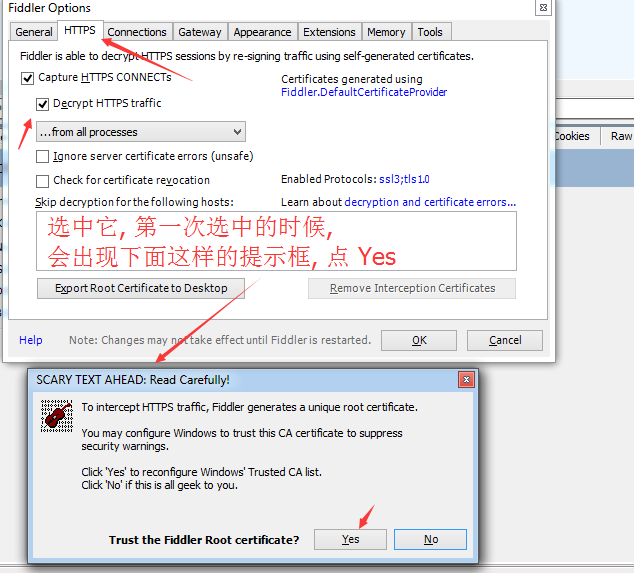
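The value copied from the `Cookie` header is one long `name=value; name=value` string, while Scrapy's `scrapy.Request` accepts cookies as a dict through its `cookies` argument. A minimal sketch of the conversion follows. The function name `cookie_header_to_dict` and the sample cookie names are made up for illustration, not something Tmall or Scrapy defines.

```python
def cookie_header_to_dict(raw_header):
    """Turn a raw Cookie header value into a {name: value} dict.

    Hypothetical helper for illustration: accepts the text copied from
    Fiddler, with or without the leading "Cookie:" label.
    """
    # Drop the header label if it was copied along with the value
    if raw_header.lower().startswith("cookie:"):
        raw_header = raw_header[len("cookie:"):]

    cookies = {}
    for pair in raw_header.split(";"):
        # Split on the first "=" only, since values may contain "=" too
        name, sep, value = pair.strip().partition("=")
        if sep and name:
            cookies[name] = value
    return cookies


if __name__ == "__main__":
    # Placeholder names and values; paste the real header from Fiddler here
    sample = "Cookie: name1=value1; name2=abc=="
    print(cookie_header_to_dict(sample))
```

In the spider from the question, the resulting dict could then be sent with the first request, for example by yielding `scrapy.Request(url, cookies=cookies, callback=self.parse)` from a `start_requests` method instead of relying on `start_urls`. Cookies captured this way expire, so they may need to be copied again later.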

Because Tmall uses HTTPS, you need to do the following to see its HTTPS traffic in Fiddler:

1. `Tools` -> `Fiddler Options`

2. `HTTPS` -> `Decrypt HTTPS traffic`

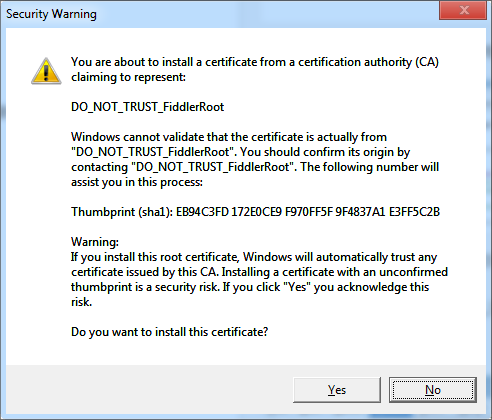

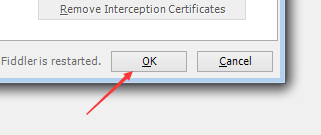

After you click `Yes`, a second prompt appears. Choose `Yes` again, then click

Fiddler download link:

http://www.telerik.com/download/fiddler
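Once the cookie is being sent, one way to check whether it is accepted is to look at where the response finally ended up. The log in the question shows the request being bounced to `login.taobao.com/jump?target=...`, so a response whose URL is still on that host suggests the cookie was missing or expired. The sketch below uses only the standard library. The helper names and the `LOGIN_HOSTS` set are assumptions for illustration, and the host list may need extending.

```python
from urllib.parse import urlparse, parse_qs

# Host taken from the redirect log in the question; extend as needed (assumption)
LOGIN_HOSTS = {"login.taobao.com"}


def is_login_redirect(url):
    """Return True if the URL points at a known login host."""
    return urlparse(url).hostname in LOGIN_HOSTS


def original_target(url):
    """Return the decoded `target` query parameter, or None if absent."""
    values = parse_qs(urlparse(url).query).get("target")
    return values[0] if values else None


if __name__ == "__main__":
    redirected = ("https://login.taobao.com/jump"
                  "?target=https%3A%2F%2Flist.tmall.com%2Fsearch_product.htm")
    if is_login_redirect(redirected):
        print("Still redirected to login; wanted:", original_target(redirected))
```

Inside a Scrapy callback, the same check could be applied to `response.url` before running any XPath, so that a login bounce is logged clearly instead of showing up as an empty result list.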