Why can't I scrape Tmall product info even after adding a cookie?

```python
# -*- coding: utf-8 -*-
import scrapy
from topgoods.items import TopgoodsItem


class TmGoodsSpider(scrapy.Spider):
    name = "tm_goods"
    allowed_domains = ["http://www.tmall.com"]
    start_urls = (
        'http://list.tmall.com/search_product.htm?type=pc&totalPage=100&cat=50025135&sort=d&style=g&from=sn_1_cat-qp&active=1&jumpto=10#J_Filter',
    )

    def start_requests(self):
        url = "http://list.tmall.com/search_product.htm?type=pc&totalPage=100&cat=50025135&sort=d&style=g&from=sn_1_cat-qp&active=1&jumpto=10#J_Filter"
        cookie_str = {
            '_med=dw:1366&dh:768&pw:1366&ph:768&ist:0; cq=ccp%3D1; isg=C6663DCE197F720203B92624681E4B8C; l=AoeH66TTsi4Uak-SSaRFZVakVzRRjFtu; cna=SmmqDk4Ey1oCATtNKm4+v1fc; _tb_token_=Rgq87NAbuYsOqd; ck1=;'  # the cookie has been altered; it is not the original
        }
        return [
            scrapy.Request(url, cookies=cookies_str),
        ]

    # Number of pages processed
    count = 0

    def parse(self, response):
        TmGoodsSpider.count += 1
        divs = response.xpath("//div[@id='J_ItemList']/div[@class='product']/div")
        if not divs:
            self.log("List Page error--%s" % response.url)
        for div in divs:
            item = TopgoodsItem()
            # Product price
            item["GOODS_PRICE"] = div.xpath("p[@class='productPrice']/em/@title")[0].extract()
            # Product name
            item["GOODS_NAME"] = div.xpath("p[@class='productTitle']/a/@title")[0].extract()
            # Product link
            pre_goods_url = div.xpath("p[@class='productTitle']/a/@href")[0].extract()
            item["GOODS_URL"] = pre_goods_url if "http:" in pre_goods_url else ("http:" + pre_goods_url)
            yield scrapy.Request(url=item["GOODS_URL"], meta={'item': item}, callback=self.parse_detail,
                                 dont_filter=True)

    def parse_detail(self, response):
        div = response.xpath('//div[@class="extend"]/ul')
        if not div:
            self.log("Detail Page error--%s" % response.url)
        item = response.meta['item']
        div = div[0]
        # Shop name
        item["SHOP_NAME"] = div.xpath("li[1]/div/a/text()")[0].extract()
        # Shop link
        item["SHOP_URL"] = div.xpath("li[1]/div/a/@href")[0].extract()
        # Company name
        item["COMPANY_NAME"] = div.xpath("li[3]/div/text()")[0].extract().strip()
        # Company location
        item["COMPANY_ADDRESS"] = div.xpath("li[4]/div/text()")[0].extract().strip()
        yield item
```
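One detail in `parse` that could cause trouble later: `GOODS_URL` is built by checking `"http:" in pre_goods_url`, so an absolute `https://...` link would come out as `http:https://...`. Resolving each href against the page URL avoids that and also covers protocol-relative `//...` links. A minimal sketch assuming Python 3's `urllib.parse.urljoin`; the helper name `absolute_url` and the sample URLs are hypothetical:

```python
from urllib.parse import urljoin


def absolute_url(page_url, href):
    """Resolve an href found on page_url into an absolute URL.

    urljoin leaves absolute URLs unchanged, gives protocol-relative
    ("//host/path") links the page's scheme, and resolves relative paths.
    """
    return urljoin(page_url, href)


# Hypothetical sample values, for illustration only
page = "https://list.tmall.com/search_product.htm?cat=50025135"
print(absolute_url(page, "//detail.tmall.com/item.htm?id=1"))
print(absolute_url(page, "https://detail.tmall.com/item.htm?id=2"))
print(absolute_url(page, "/search_product.htm?cat=1"))
```

In the spider this would be `absolute_url(response.url, pre_goods_url)`; newer Scrapy releases also provide `response.urljoin(pre_goods_url)` for the same purpose.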

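Thanks to 小秦 for the answer to "How do I find Tmall's cookie?". However, even after adding the cookie I still get an error, and I can't figure out what's wrong.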

Error output:

```
10-18 20:05:44 [scrapy] INFO: Scrapy 1.0.3 started (bot: topgoods)
10-18 20:05:44 [scrapy] INFO: Optional features available: ssl, http11
10-18 20:05:44 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'topgoods.spiders', 'FEED_FORMAT': 'csv', 'SPIDER_MODULES': ['topgoods.spiders'], 'FEED_URI': 'abc.csv', 'BOT_NAME': 'topgoods'}
10-18 20:05:45 [scrapy] INFO: Enabled extensions: CloseSpider, FeedExporter, TelnetConsole, LogStats, CoreStats, SpiderState
10-18 20:05:46 [scrapy] INFO: Enabled downloader middlewares: HttpAuthMiddleware, DownloadTimeoutMiddleware, UserAgentMiddleware, RetryMiddleware, DefaultHeadersMiddleware, MetaRefreshMiddleware, HttpCompressionMiddleware, RedirectMiddleware, CookiesMiddleware, HttpProxyMiddleware, ChunkedTransferMiddleware, DownloaderStats
10-18 20:05:46 [scrapy] INFO: Enabled spider middlewares: HttpErrorMiddleware, OffsiteMiddleware, RefererMiddleware, UrlLengthMiddleware, DepthMiddleware
10-18 20:05:46 [scrapy] INFO: Enabled item pipelines:
Unhandled error in Deferred:
10-18 20:05:46 [twisted] CRITICAL: Unhandled error in Deferred:
10-18 20:05:46 [twisted] CRITICAL:
```

Could it be a problem with the pipelines? I didn't change the one that was already set up by default:

```python
class TopgoodsPipeline(object):
    def process_item(self, item, spider):
        return item
```
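The default pipeline only hands each item back unchanged, so it is unlikely to be the culprit. The log stops before any page is crawled, which points at `start_requests` instead: `cookie_str = {'...'}` builds a set holding one string (not a dict), and the request then uses `cookies=cookies_str`, a name that is never defined, so a `NameError` is the most likely source of the `Unhandled error in Deferred`. `scrapy.Request` accepts `cookies` as a dict (or a list of dicts), so the browser's `name=value; name=value` string has to be split first. A minimal sketch, using the hypothetical helper name `cookie_string_to_dict` and the (already altered) cookie from the question:

```python
def cookie_string_to_dict(cookie_str):
    """Split a browser Cookie string ("k1=v1; k2=v2") into {"k1": "v1", "k2": "v2"}."""
    cookies = {}
    for pair in cookie_str.split(';'):
        if '=' not in pair:
            continue  # skip empty fragments, such as the one after a trailing ';'
        # Split only on the first '=' so values containing '=' stay intact
        name, value = pair.strip().split('=', 1)
        cookies[name] = value
    return cookies


cookie_str = ('_med=dw:1366&dh:768&pw:1366&ph:768&ist:0; cq=ccp%3D1; '
              'isg=C6663DCE197F720203B92624681E4B8C; l=AoeH66TTsi4Uak-SSaRFZVakVzRRjFtu; '
              'cna=SmmqDk4Ey1oCATtNKm4+v1fc; _tb_token_=Rgq87NAbuYsOqd; ck1=;')
print(cookie_string_to_dict(cookie_str))
```

Inside `start_requests`, `cookie_str` would then be a plain string (no braces) and the request would be built as `scrapy.Request(url, cookies=cookie_string_to_dict(cookie_str))`. Whether Tmall then serves the list page still depends on the cookie being valid, which this sketch cannot check.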

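Could you all please take another look at my code? I'm a self-taught beginner without a CS background, and this is my first time using Scrapy to simulate a login.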

Answers

Sigh...

After reading your code carefully, I see you're only scraping the search results...

Here's the code:

```python
#!/usr/bin/python
# -*- coding=utf-8 -*-
import urllib, urllib2, cookielib, sys, re

reload(sys)
sys.setdefaultencoding('utf-8')

__cookie = cookielib.CookieJar()
__req = urllib2.build_opener(urllib2.HTTPCookieProcessor(__cookie))
__req.addheaders = [
    ('Accept', 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'),
    ('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.71 Safari/537.36')
]
urllib2.install_opener(__req)


def Get(url):
    req = urllib2.Request(url)
    return urllib2.urlopen(req).read()


url = 'https://list.tmall.com/search_product.htm?type=pc&totalPage=100&cat=50025135&sort=d&style=g&from=sn_1_cat-qp&active=1&jumpto=10'
# Turn HTML-escaped '&amp;' back into '&' (e.g. in link query strings)
html = Get(url).replace('&amp;', '&')

reForProduct = re.compile(r'<div class="product " data-id="(\d+)"[\s\S]+?<p class="productPrice">\s+<em title="(.+?)">[\s\S]+?<p class="productTitle">\s+<a href="(.+?)" target="_blank" title="(.+?)"[\s\S]+?<\/div>\s+<\/div>')
# Find all products
products = reForProduct.findall(html)

i = 0
reForShop = re.compile(r'<div class="extend">[\s\S]+?<a href="(.+?)".+?>(.+?)<\/a>[\s\S]+<div class="right">\s+(.+?)\s+<\/div>\s+<\/li>\s+<li class="locus">[\s\S]+?<div class="right">\s+(.+?)\s+<\/div>')

for (pid, price, url, title) in products:
    if url.startswith('//'):
        url = 'https:' + url
    print ("%s\t%s\t%s\t%s") % (pid, price, title, url)

    # For demonstration only: fetch shop details for just the first 4 search results
    if i < 4:
        i = i + 1
        shopDetail = Get(url)
        shop = reForShop.findall(shopDetail)
        (shopUrl, shopName, companyName, companyAddress) = shop[0]
        if shopUrl.startswith('//'):
            shopUrl = 'https:' + shopUrl
        print ("%s\t%s\t%s\t%s") % (shopName, shopUrl, companyName, companyAddress)
```

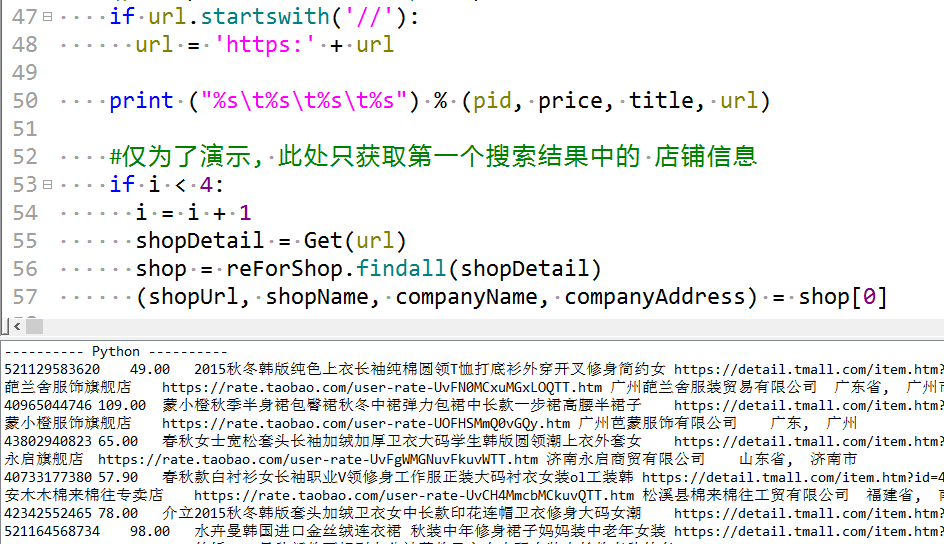

运行结果截图:
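The answer's script is Python 2 (`urllib2`, `cookielib`, `reload(sys)`). In Python 3 the same pieces live in `urllib.request` and `http.cookiejar`, and the `setdefaultencoding` hack is neither available nor needed. A minimal sketch of an equivalent cookie-aware opener with the answer's headers; the lowercase `get` name and decoding the body as UTF-8 are assumptions (the page may declare a different charset):

```python
import http.cookiejar
import urllib.request

# Cookie jar shared by every request made through the opener, so cookies set
# by earlier responses are sent back automatically, as in the Python 2 version
cookie_jar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie_jar))
opener.addheaders = [
    ('Accept', 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'),
    ('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/46.0.2490.71 Safari/537.36'),
]
# Make plain urllib.request.urlopen() calls go through this opener
urllib.request.install_opener(opener)


def get(url, encoding='utf-8'):
    """Fetch url and return the body as text (the encoding is an assumption)."""
    with urllib.request.urlopen(url) as resp:
        return resp.read().decode(encoding, errors='replace')
```

`get(url)` returns `str` rather than bytes, so it can stand in for the answer's `Get(url)` and the regexes can be applied to its result directly.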

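I'm not familiar with the scrapy you're using, so I can't help with that code. Also, it looks like your problem is simply an error in your own code, not that the site refuses to return content (your code hasn't even gotten that far).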
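Since the answer relies entirely on `reForProduct`, it can be worth checking what `findall` returns before touching the live site. A minimal offline sketch in Python 3: the pattern is the answer's, written as adjacent raw strings, while the HTML is a hypothetical hand-written snippet shaped to fit it, not captured Tmall markup:

```python
import re

# The answer's product pattern, split into adjacent raw strings for readability
reForProduct = re.compile(
    r'<div class="product " data-id="(\d+)"[\s\S]+?<p class="productPrice">\s+<em title="(.+?)">'
    r'[\s\S]+?<p class="productTitle">\s+<a href="(.+?)" target="_blank" title="(.+?)"'
    r'[\s\S]+?<\/div>\s+<\/div>'
)

# Hypothetical markup written to fit the pattern (not real Tmall HTML)
sample = '''
<div class="product " data-id="123">
  <div class="product-iWrap">
    <p class="productPrice">
      <em title="99.00">99.00</em>
    </p>
    <p class="productTitle">
      <a href="//detail.tmall.com/item.htm?id=123" target="_blank" title="Demo item">Demo item</a>
    </p>
  </div>
</div>
'''

# findall returns one tuple of the four capture groups per match
for pid, price, url, title in reForProduct.findall(sample):
    print(pid, price, title, url)
```

Each match comes back as a `(pid, price, url, title)` tuple, which is why the answer's loop unpacks four names and then fixes up the `//` prefix. A pattern this specific stops matching as soon as any literal part of the markup, such as the trailing space in `class="product "`, changes, so an empty list from the real page is the first thing to check.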